Why CLIKA’s Auto Lightweight AI Toolkit is the Key to Unlocking Hardware-Aware AI

Recent advances in artificial intelligence (AI) research have democratized access to models like ChatGPT. This is good news: it has pushed organizations and companies to start their own AI projects, either to improve business operations or to reduce overhead costs. However, adopting AI has also brought a host of problems that make it difficult to realize its full potential. These include high operating costs and the inherent difficulty of deploying models to production on different hardware and architectures.

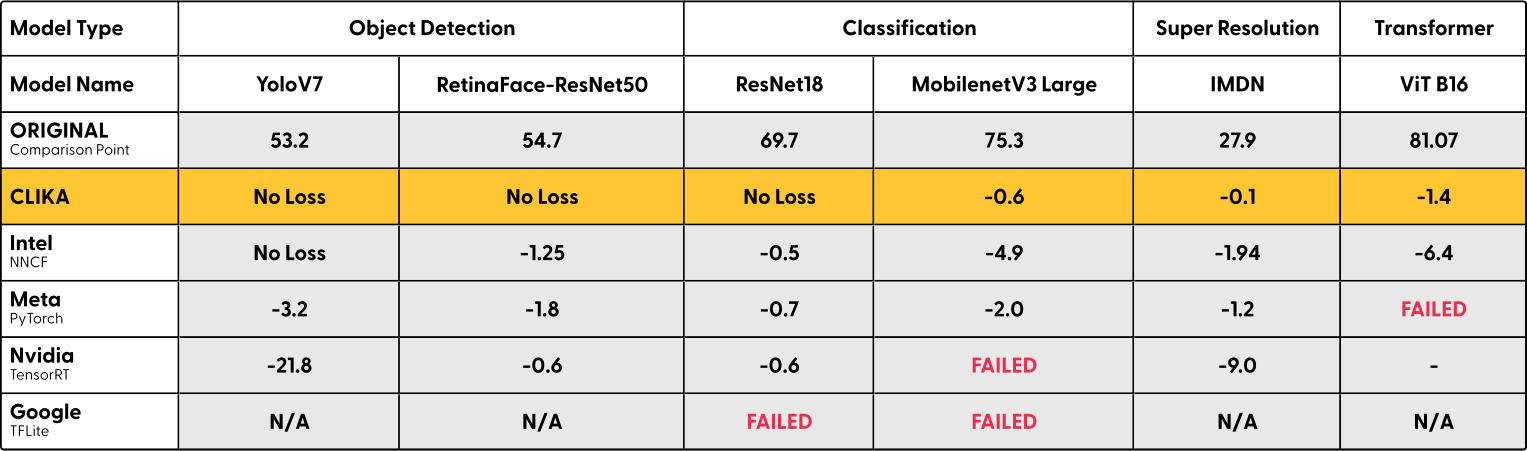

These problems arise partly because AI engineers often build models without fully considering how deployable they are on the target hardware. Model deployment is usually an internally developed process that runs on different computers or servers in varying environments or locations. Engineers often use free or open-source solutions like NVIDIA’s TensorRT, Meta’s PyTorch or Google’s TFLite to optimize these models before deployment. The entire process is not only failure-prone, manual and cumbersome, but also often requires frequent back-and-forth iteration between model development and the constraints of the target hardware.

Even models destined for the cloud, where deployment can be less onerous, become very expensive to operate at scale. In the long run, these issues can outweigh the benefits of adopting an AI-based solution in the first place.

“Most AI projects fail. Some estimates place the failure rate as high as 80%.”

- Harvard Business Review

So it is no surprise that businesses at various stages of growth, including enterprises, that experiment with some of the latest AI models end up terminating these projects for exactly the reasons mentioned above. Examples include:

- A visual content tech company with a team of 50+ people that faced limitations in servicing and scaling its proprietary vision AI technology due to performance degradation

- A national restaurant chain that hoped to increase its profit margins with AI, but spends well over USD 2 million per year on cloud costs just to operate its AI solution

- A smart factory that realized it could not productionize its AI solution because the solution was incompatible with the target deployment hardware

With different types of hardware becoming increasingly available (and at affordable prices), and with big tech companies racing to release the next state-of-the-art (SOTA) model, we expect these problems to only grow with time. This is because certain types of hardware come with limited compute resources and support only a limited set of the layers and operators a model may use.
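To make the operator-support problem concrete, here is a minimal sketch in Python. Everything in it is hypothetical and for illustration only: the `Layer` type, the layer and operator names, and the `edge_device_ops` set do not describe any real device, framework, or the CLIKA SDK. It simply checks a model's layers against the set of operators a target is assumed to support.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str     # hypothetical layer name inside the model
    op_type: str  # operator the layer uses, e.g. "Conv2d"


def find_unsupported_layers(layers, supported_ops):
    """Return the layers whose operator is not in the target's supported set."""
    return [layer for layer in layers if layer.op_type not in supported_ops]


# Hypothetical model and hypothetical target hardware (assumptions, not real data).
model_layers = [
    Layer("stem", "Conv2d"),
    Layer("act", "GELU"),
    Layer("head", "Linear"),
]
edge_device_ops = {"Conv2d", "ReLU", "Linear"}

blockers = find_unsupported_layers(model_layers, edge_device_ops)
for layer in blockers:
    print(f"{layer.name}: operator {layer.op_type} is not supported on this target")
```

A check of this kind, run while the model is still being designed rather than at deployment time, is one way such incompatibilities could surface early.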

Our solution to this problem is an auto lightweight AI toolkit that is also hardware-agnostic.

It is easy to use, even for engineers with limited professional or technical experience. It is offered as a Software Development Kit (SDK) powered by our own compression technology, the Auto Compression Engine (ACE). Our SDK simplifies this complex engineering workflow by automating the process from model compression, through format compilation, to hardware deployment. Built on our proprietary compression algorithm, ACE reduces an AI model’s size by up to 95% while also enabling greater speed, accuracy and efficiency. More details on our compression benchmarks can be found here:

Our toolkit makes AI models lightweight in a matter of minutes, not weeks, saving engineers and businesses a great deal of time, money and headaches. With CLIKA, businesses can expect to save up to 80% in operating costs, whether on hardware purchases or cloud usage. CLIKA is here to embed devices with the intelligence to hear, see and understand our surroundings better by bridging the gap between AI software and hardware.